The Last Responsible Moment

When we develop a software product, we make decisions. We decide about individual features, we make design decisions, we make coding decisions, we even decide which bugs we really want to fix before going public. Some decisions are taken on the fly; some, at least in the old school, are somewhat planned.

A key principle of Lean Development is to delay decisions, so that:

a) decisions can be based on (yet-to-discover) facts, not on speculation

b) you exercise the wait option (more on this below) and avoid early commitment

The principle is often stated as "Delay decisions until the last responsible moment", but a quick look at Mary Poppendieck's website (Mary co-created the Lean Development approach) shows a more interesting nuance: "Schedule Irreversible Decisions at the Last Responsible Moment".

Defining "Irreversible" and "Last Responsible" is not trivial. In a sense, there is nothing in software that is truly irreversible, because you can always start over. I haven't found a good definition for "irreversible decision" in the literature, but I would define it as follows: if you make an irreversible decision at time T, undoing the decision at a later time will entail a complete (or almost complete) waste of everything that has been created after time T.

There are some documented definitions for "last responsible moment". A popular one is "The point when failing to decide eliminates an important option", which I found rather unsatisfactory. I've also seen some attempts to quantify that better, as in this funny story, except that in the real world you never have a problem which is that simple (very few ramifications in the decision graph) and that detailed (you know the schedule beforehand). I would probably define the Last Responsible Moment as follows: time T is the last responsible moment to make a decision D if, by postponing D beyond T, the probability of completing on schedule/budget (even when you factor in the hypothetical learning effect of postponing) decreases below an acceptable threshold. That, of course, allows us to scrap everything and restart, if schedule and budget allow for it, and in this sense it's kinda coupled with the definition of irreversible.
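To make that definition a little more tangible, here is a minimal sketch in Python. It assumes something we rarely have in practice: an estimate, for each candidate time, of the probability of finishing on schedule/budget if the decision is made at that time. The function name, the list of estimates and the threshold are all invented for illustration, and I'm reading "last responsible moment" as the latest time at which that probability is still acceptable.

```python
from typing import Optional, Sequence


def last_responsible_moment(p_on_time: Sequence[float],
                            threshold: float) -> Optional[int]:
    """Return the latest time index at which deciding is still acceptable.

    p_on_time[t] is a (hypothetical) estimate of the probability of
    completing on schedule/budget if decision D is made at time t,
    already including whatever we expect to learn by waiting until t.
    Returns None if no time meets the threshold.
    """
    last = None
    for t, p in enumerate(p_on_time):
        if p >= threshold:
            last = t  # deciding at t is still "responsible"
    return last


# Waiting helps at first (we learn something), then starts to hurt.
estimates = [0.80, 0.85, 0.90, 0.75, 0.50]
print(last_responsible_moment(estimates, threshold=0.7))
```

The hard part, of course, is not the loop but getting those estimates, which is exactly why the definition is difficult to apply.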

Now, irreversibility is bad. We don't want to make irreversible decisions. We certainly don't want to make them too soon. Is there anything we can do? I've got a few important things to say about modularity vs. irreversibility and passive vs. proactive option thinking, but right now, it's useful to recap the major decision areas within a software project, so that we can clearly understand what we can actually delay, and what is usually suggested that we delay.

Major Decision Areas

I'll skip a few very-high-level, strategic decisions here (scope, strategy, business model, etc). It's not that they can't be postponed, but I need to give some focus to this post :-). So I'll get down to the more ordinarily taken decisions.

People

Choosing the right people for the project is a well-known ingredient for success.

Approach/Process

Are we going XP, Waterfall, something in between? :-).

Feature Set

Are we going to include this feature or not?

Design

What is the internal shape (form) of our product?

Coding

Much like design, at a finer granularity level.

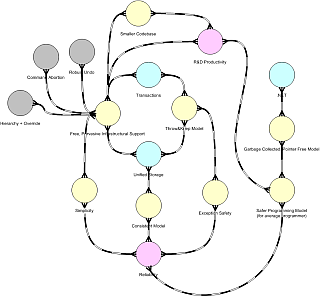

Now, "design" is an overly general concept. Too general to be useful. Therefore, I'll split it into a few major decisions.

Architectural Style

Is this going to be an embedded application, a rich client, a web application? This is a rather irreversible decision.

Platform

Goes somewhat hand in hand with Architectural Style. Are we going with an embedded application burnt into an FPGA? Do you want to target a PIC? Perhaps an embedded PC? Is the client a Windows machine, or do you want to support Mac/Linux? A .NET server side, or maybe Java? It's all rather irreversible, although not completely irreversible.

3rd-Party Libraries/Components/Etc

Are we going to use some existing component (of various scale)? Unless you plan on wrapping everything (which may not even be possible), this often ends up being an irreversible decision. For instance, once you commit yourself to using Hibernate for persistence, it's not trivial to move away.

Programming Language

This is the quintessential irreversible decision, unless you want to play with language converters. Note that this is not a coding decision: coding decisions are made after the language has been chosen.

Structure / Shape / Form

This is what we usually call "design": the shape we want to impose on our material (or, if you live on the "emergent design" side, the shape that our material will take as the final result of several incremental decisions).

So, what are we going to delay? We can't delay all decisions, or we'll be stuck. Sure, we can delay something in each and every area, but truth is, every popular method has been focusing on just a few of them. Of course, different methods tried to delay different choices.

A Little Historical Perspective

Experience brings perspective; at least, true experience does :-). Perspective allows us to look at something and see more than is usually seen. For instance, perspective allows us to look at the old, outdated, obsolete waterfall approach and see that it (too) was meant to delay decisions, just different decisions.

Waterfall was meant to delay people decisions, design decisions (which include platform, library, component decisions) and coding decisions. People decisions were delayed by specialization: you only have to pick the analyst first; everyone else can be chosen later, when you know what you gotta do (it even makes sense :-)). Design decisions were delayed because platforms (languages, OS, etc.) were way more balkanized than today. Also, architectural styles and patterns were much less understood, and it made sense to look at a larger picture before committing to an overall architecture.

Although this may seem rather ridiculous from the perspective of a 2010 programmer working on Java corporate web applications, most of this stuff is still relevant for (e.g.) mass-produced embedded systems, where choosing the right platform may radically change the total development and production cost, yet choosing the wrong platform may over-constrain the feature set.

Indeed, open systems (another legacy term from the late '80s - early '90s) were born exactly to lighten up that choice. Choose the *nix world, and forget about it. Of course, the decision was still irreversible, but it granted you some latitude in choosing the exact hw/sw. The entire multi-platform industry (from multi-OS libraries to Java) is basically built on the same foundations. Well, that's the bright side, of course :-).

Looking beyond platform independence, the entire concept of a "standard" allows us to delay some decisions. TCP/IP, for instance, allows me to choose modularly (a concept I'll elaborate on later). I can choose TCP/IP as the transport mechanism, and then delay the choice of (e.g.) the client side, and focus on the server side. Of course, a choice is still made (the client must have TCP/IP support), so let's say that widely adopted standards allow for some modularity in the decision process, and therefore let us delay some decisions, mostly design decisions, but perhaps some others as well (like people).

It's already going to be a long post, so I won't look at each and every method/principle/tool ever conceived, but if you do your homework, you'll find that a lot of what has been proposed in the last 40 years or so (from code generators to MDA, from spiral development to XP, from stepwise refinement to OOP) includes some magic ingredient that allows us to postpone some kind of decision.

It's 2010, guys

So, if you ain't agile, you are clumsy :-)) and c'mon, you don't wanna be clumsy :-). So, seriously, which kind of decisions are usually delayed in (e.g.) XP?

People? I must say I haven't seen much on this. Most literature on XP seems based on the concept that team members are mostly programmers with a wide set of skills, so there should be no particular reason to delay decisions about who's gonna work on what. I may have missed some particularly relevant work, however.

Feature Set? Sure. Every incremental approach allows us to delay decisions about features. This can be very advantageous if we can play the learning game, which includes rapid/frequent delivery, or we won't learn enough to actually steer the feature set.

Of course, delaying some decisions on feature set can make some design options viable now, and totally bogus later. Here is where you really have to understand the concept of irreversible and last responsible moment. Of course, if you work on a settled platform, things get simpler, which is one more reason why people get religiously attached to a platform.

Design? Sure, but let's take a deeper look.

Architectural Style: not much. Quoting Booch, "agile projects often start out assuming a given platform and environmental context together with a set of proven design patterns for that domain, all of which represent architectural decisions in a very real sense". See my post Architecture as Tradition in the Unselfconscious Process for more.

Seriously, nobody ever expected to start with a monolithic client and end up with a three-tier web application built around an MVC pattern just by coding and refactoring. The architectural style is pretty much a given in many contemporary projects.

Platform: sorry guys, but if you want to start coding now, you gotta choose your platform now. Another irreversible decision made right at the beginning.

3rd-Party Libraries/Components/Etc: some delay is possible for modularized decisions. If you wanna use Hibernate, you gotta choose pretty soon. If you wanna use Seam, you gotta choose pretty soon. Pervasive libraries are so entangled with architectural styles that it's relatively hard to delay some decisions here. Modularized components (e.g. the choice of a PDF rendering library) are simple to delay, and can be proactively delayed (see later).
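As a rough illustration of a modularized component decision, here is a minimal Python sketch. Everything in it is hypothetical: `PdfRenderer`, `PlaceholderRenderer` and `InvoiceService` are names I made up, and no real PDF library is involved. The point is only that the rest of the code depends on a small interface, so the choice of the actual library can wait.

```python
from typing import Protocol


class PdfRenderer(Protocol):
    """The only thing the application knows about PDF rendering."""

    def render(self, text: str) -> bytes: ...


class PlaceholderRenderer:
    """Stand-in used until a real library is chosen (not a valid PDF)."""

    def render(self, text: str) -> bytes:
        return b"%PDF-placeholder\n" + text.encode("utf-8")


class InvoiceService:
    """Application code: depends on the interface, not on a library."""

    def __init__(self, renderer: PdfRenderer) -> None:
        self._renderer = renderer

    def export(self, customer: str, total: float) -> bytes:
        body = f"Invoice for {customer}: {total:.2f}"
        return self._renderer.render(body)


service = InvoiceService(PlaceholderRenderer())
print(service.export("ACME", 12.5))
```

When the library is finally chosen, only a new class implementing `render` has to be written. Try doing the same with a pervasive framework and you'll see why that decision can't be delayed as easily.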

Programming Language: no way guys, you have to choose right here, right now.

Structure / Shape / Form: of course!!! Here we are. This is it :-). You can delay a lot of detailed design choices. Of course, we always postpone some design decision, even when we design before coding. But let's say that this is where I see a lot of suggestions to delay decisions in the agile literature, often using the dreaded Big Upfront Design as a straw man argument. Of course, the emergent design (or accidental architecture) may or may not be good. If I had to compare the design and code coming out of the XP Episode with my own, I would say that a little upfront design can do wonders, but hey, you know me :-).

Practicing

OK guys, what follows may sound a little odd, but in the end it will prove useful. Have faith :-).

You can get better at everything by doing anything :-), so why not get better at delaying decisions by playing Windows Solitaire? All you have to do is set the options in the hardest possible way:

Now, play a little, until you have to make some decision, like here:

I could move the 9 of spades or the 9 of clubs over the 10 of hearts. It's an irreversible decision (well, not if you use the undo, but that's lame :-)). There are some ramifications for both choices.

If I move the 9 of clubs, I can later move the king of clubs and uncover a new card. After that, it's all unknown, and no further speculation is possible. Here, learning requires an irreversible decision; this is very common in real-world projects, but seldom discussed in literature.

If I move the 9 of spades, I uncover the 6 of clubs, which I can move over the 7 of diamonds. Then, it's kinda unknown, meaning: if you're a serious player (I'm not) you'll remember the previous cards, which would allow you to speculate a little better. Otherwise, it's just as above: you have to make an irreversible decision to learn the outcome.

But wait: what about the last responsible moment? Maybe we can delay this decision! Now, if you delay the decision by clicking on the deck and moving further, you're not delaying the decision: you're wasting a chance. In order to delay this decision, there must be something else you can do.

Well, indeed, there is something you can do. You can move the 8 of diamonds above the 9 of clubs. This will uncover a new card (learning) without wasting any present opportunity (it could still waste a future opportunity; life is tough). Maybe you'll get a 10 of diamonds under that 8, at which point there won't be any choice to be made about the 9. Or you might get a black 7, at which point you'll have a different way to move the king of clubs, so moving the 9 of spades would be a more attractive option. So, delay the 9 and move the 8 :-). Add some luck, and it works:

and you get some money too (total at decision time Vs. total at the end)

Novice solitaire players are also known to make irreversible decisions without necessity. For instance, in similar cases:

I've seen people eagerly moving the 6 of diamonds (actually, whatever they got) over the 7 of spades, because "that will free up a slot". Which is true, but irrelevant. This is a decision you can easily delay. Actually, it's a decision you must delay, because:

- if you happen to uncover a king, you can always move the 6. It's not the last responsible moment yet: if you do nothing now, nothing bad will happen.

- you may uncover a 6 of hearts before you uncover a king. And moving that 6 might be more advantageous than moving the 6 of diamonds. So, don't do it :-). If you want to look good, quote Option Theory, call this a Deferral Option and write a paper about it :-).

Proactive Option Thinking

I've recently read an interesting paper in IEEE TSE ("An Integrative Economic Optimization Approach to Systems Development Risk Management", by Michel Benaroch and James Goldstein). Although the real meat starts in chapter 4, chapters 1-3 are probably more interesting for the casual reader (including myself).

There, the authors recap some literature about Real Options in Software Engineering, including the popular argument that delaying decisions is akin to a deferral option. They also make important distinctions, like the one between passive learning through deferral of decisions, and proactive learning, but also between responsiveness to change (a central theme in agility literature) and manipulation of change (relatively less explored), and so on. There is a lot of food for thought in those 3 chapters, so if you can get a copy, I suggest that you spend a little time pondering over it.

Now, I'm a strong supporter of Proactive Option Thinking. Waiting for opportunities (and then reacting quickly) is not enough. I believe that options should be "implanted" in our project, and that can be done by applying the right design techniques. How? Keep reading :-).

The Invariant Decision

If you look back at those pictures of Solitaire, you'll see that I wasn't really delaying irreversible decisions. All decisions in solitaire are irreversible (real men don't use CTRL-Z). Many decisions in software development are irreversible as well, especially when you are on a tight budget/schedule, so starting over is not an option. Therefore, irreversibility can't really be the key here. Indeed, I was trying to delay Invariant Decisions: decisions that I can take now, or I can take later, with little or no impact on the outcomes. The concept itself may seem like a minor change from "irreversible", but it allows me to do some magic:

- I can get rid of the "last responsible moment" part, which is poorly defined anyway. I can just say: delay invariant decisions. Period. You can delay them as much as you want, provided they are still invariant. No ambiguity here. That's much better.

- I can proactively make some decisions invariant. This is so important I'll have to say it again, this time in bold: **I can proactively make some decisions invariant.**

Invariance, Design, Modularity

If you go back to the Historical Perspective paragraph, you can now read it under a different... perspective :-). Several tools, techniques, methods can be adopted not just to delay some decision, but to create the option to delay the decision. How? Through careful design, of course!

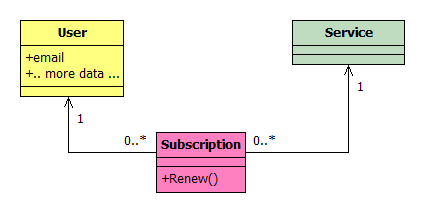

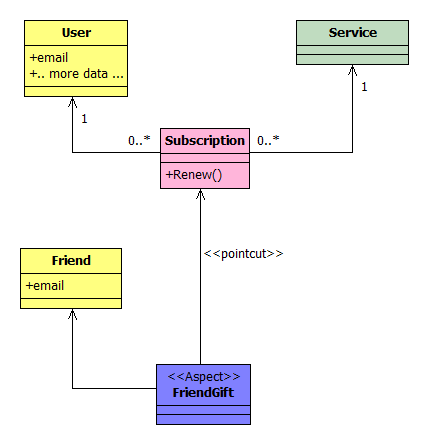

Consider the strong modularity you get from service-oriented architecture, and the platform independence that comes through (well-designed) web services. This is a powerful weapon to delay a lot of decisions on one side or another (client or server).

Consider standard protocols: they are a way to make some decision invariant, and to modularize the impact of some choices.

Consider encapsulation, abstraction and interfaces: they allow you to delay quite a few low-level decisions, and to modularize the impact of change as well. If your choice turns out to be wrong, but it's highly localized (modularized), you may be able to afford undoing your decision, therefore turning irreversible into reversible. A bare-bones example can be found in my old post (2005!) Builder [pattern] as an option.
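Here is a tiny Python sketch of what "highly localized" means. It is not the Builder example from that old post; the `Leaderboard` class and its methods are invented just for this illustration. The low-level decision (how scores are stored) lives in one place, so getting it wrong is cheap to undo.

```python
class Leaderboard:
    """Callers see add() and top(); the storage choice stays private."""

    def __init__(self) -> None:
        # Low-level decision, localized here: a plain dict for now.
        # A sorted structure or a database could replace it later
        # without touching any caller.
        self._scores: dict[str, int] = {}

    def add(self, player: str, points: int) -> None:
        self._scores[player] = self._scores.get(player, 0) + points

    def top(self, n: int) -> list[tuple[str, int]]:
        # Highest score first; ties broken by player name.
        ranked = sorted(self._scores.items(), key=lambda kv: (-kv[1], kv[0]))
        return ranked[:n]


board = Leaderboard()
board.add("ann", 3)
board.add("bob", 5)
board.add("ann", 4)
print(board.top(1))
```

If every caller had poked directly into a shared dictionary instead, the same storage decision would have been far more expensive to reverse.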

Consider a very old OOA/OOD principle, now somehow resurrected under the "ubiquitous language" umbrella. It states that you should try to reflect the real-world entities that you're dealing with in your design, and then in your code. That includes avoiding primitive types like integer, and creating meaningful classes instead. Of course, you have to understand what you're doing (that is, you gotta be a good designer) to avoid useless overengineering. See part 4 of my digression on the XP Episode for a discussion about adding a seemingly useless Ball class (that is: implanting a low cost - high premium option).
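To give a flavor of the idea (and only a flavor: this is a hypothetical sketch in Python, not the Ball class discussed in that digression), compare passing around a bare integer with passing around a small named class. The field name, the 0-10 range and the helper function are my own assumptions for the example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Ball:
    """A single throw, instead of a naked int for 'pins knocked down'."""

    pins: int

    def __post_init__(self) -> None:
        # The named concept gives this rule an obvious home.
        if not 0 <= self.pins <= 10:
            raise ValueError(f"pins must be between 0 and 10, got {self.pins}")


def total_pins(balls: Iterable[Ball]) -> int:
    """Sum the pins over a sequence of throws."""
    return sum(ball.pins for ball in balls)


throws = [Ball(7), Ball(2), Ball(10)]
print(total_pins(throws))
```

The class costs a few lines today. The premium is that any later decision about what a throw is or carries has exactly one place to land.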

Names alter the forcefield. A named concept stands apart. My next post on the forcefield theme, by the way, will explore this issue in depth :-).

And so on. I could go on forever, but the point is: you can make many (but not all, of course!) decisions invariant, if you apply the right design techniques. Most of those techniques will also modularize the cost of rework if you make the wrong decision. And sure, you can try to do this on the fly as you code. Or you may want to do some upfront design. You know what I'm thinking.

OK guys, it took quite a while, but now we have a new concept to play with, so more on this will follow, randomly as usual. Stay tuned.